The fight against child sexual abuse

Case Study

Child sexual abuse (CSA) is a serious crime with devastating effects on children’s lives. Alarmingly, the threat is intensifying due to the explosive growth of Generative Artificial Intelligence (Gen AI). One significant concern is that AI enables offenders to produce and share child sexual abuse material (CSAM) easily and at an astonishing rate.

Wendy Hart, Deputy Director for Child Sexual Abuse at the National Crime Agency (NCA), and Ian Critchley QPM, former Deputy Chief Constable and former National Police Chiefs’ Council lead for Child Protection, who is now leading the implementation of a National Centre of Public Protection in collaboration with the College of Policing, share the challenges they face staying ahead of offenders and keeping children safe.

The perpetrators of CSA constantly adapt to groom and abuse. While some are motivated by profit, many are collaborative, quickly sharing techniques online because of their sexual interest in children. Gen AI, now integral to daily life, poses a profound threat as it is being adapted by child sex offenders to create CSAM and convincing deepfakes through image, text or video generators, making it easier to victimise and re-victimise children. Guides to generating AI CSAM have been widely shared in dark web forums.¹ The ease with which AI can be used and abuse material shared on the open web² is generating increased demand, further spreading the abuse.

Last year, the Internet Watch Foundation (IWF) identified more than 275,000 image referrals concerning child exploitation, and the UK’s Child Abuse Image Database (CAID)³ contains a staggering collection of over 30 million media files, including images and videos.⁴ Moreover, industry referrals to the National Center for Missing & Exploited Children (NCMEC), based in the United States, continue to increase globally, up from 22 million in 2020 to 36 million in 2023.⁵

The Independent Inquiry into Child Sexual Abuse (IICSA) further highlights the severity of the threat.

In 2013, policing recorded 20,000 crimes of child sexual abuse (contact and non-contact), a number which soared to nearly 107,000 in 2022 because of the surge of tech-facilitated CSA.⁶

This increase is partly because more of us are living our lives online. However, Ian Critchley, who has spent over 30 years working as a top detective combatting child abuse, stresses this is just the tip of the iceberg. He states, “the cases known to us represent only a fraction of the actual prevalence as CSA is chronically underreported.”

It may come as a shock to learn that child-on-child offending is now present in 50% of recorded CSA crimes,⁷ which Critchley says is fuelled by smartphones and access to violent pornography. “Much of it is high harm. The most common offences committed are sexual assault, rape and taking, making or sharing indecent images.”

However, Critchley is keen to stress that the “greatest risk to children comes from adults”.

The NCA estimates that between 710,000 and 840,000 adults in the UK pose a sexual risk to children, which equates to about 1.3% to 1.6% of the adult population.⁸ One in six girls and one in 20 boys will be sexually abused before they are 16.⁹

Wendy Hart, who works with Critchley to ensure a coordinated response to this threat, said, “The shockingly vast number of potential child sex offenders in the UK and the sheer volume of child sexual imagery available online, which makes it more accessible than ever, is deeply troubling.” She warns that it is creating a permissive environment for individuals to develop a sexual interest in children, potentially leading to more severe contact abuse. A report from Protect Children¹⁰ has highlighted the link between viewing pornography online and contact offending, with 37% of people stating they have sought to commit an in-person offence after looking at indecent imagery online.

On top of that, offenders are starting to exploit immersive virtual worlds like the Metaverse. There is also a worrying trend of financially motivated sexual extortion,¹¹ which has resulted in a string of cases where children have tragically taken their lives.

Traditional methods of identifying CSAM rely on recognising and tracking known images. This innovative technology, used by CAID in the UK, allows for the generation of unique hash values for images and the identification of victims. It has served as the backbone of an international response to CSA for a number of years. However, Gen AI’s ability to rapidly generate new, unique content could pose a threat to this method – and risks wasting law enforcement time trying to identify non-existent victims because AI-generated images cannot be distinguished from real ones.

Yet emerging strategies are being developed to tackle these issues, including the use of AI to combat CSAM. Established in 2014, CAID is now poised for upgrades. Currently, images in CAID undergo a verification process by three individuals before they are classified and deemed evidence ready. A new AI “classifier” is set to be phased in following successful trials to help officers determine the severity of illegal imagery more efficiently.¹² At present, officers can grade up to 200 images per hour. With the new image categorisation algorithm, this number could increase to an impressive 2,000 images per hour, as it will sort images before they reach officers. This will significantly reduce the psychological burden on officers too, which is necessary to maintain wellbeing in this challenging environment.

A trial phase for a fast-forensic tool is also in progress.¹³ This tool is designed to speed up the analysis of images on seized devices at the scene, with the goal of significantly reducing police processing time. For instance, with this new tech, a 1TB drive could be processed in as little as 30 minutes – a task that currently takes up to 24 hours.

We need to adopt a more agile and intelligence-led approach which incorporates advanced automation and AI to tackle the overwhelming volume of CSA material. We know AI can be trained to detect inconsistencies in digital content that are often indicative of AI generated or enhanced material. We need to leverage this to both alleviate the emotional toll on officers exposed to distressing content and to increase productivity.

Ian Critchley QPM, former Deputy Chief Constable and former National Police Chiefs’ Council lead for Child Protection

In the pursuit of innovation, the police are also progressively advancing their data analytics capabilities to gain deeper insights into criminal behaviours and empower local safeguarding teams on the ground to spot patterns and evaluate potential risks to children more effectively. A prime example is the Tackling Organised Exploitation (TOEX) programme,¹⁴ which is improving operations by employing an enhanced data analytics strategy to accurately assess child vulnerability and expose offender networks.

Critchley emphasises there is still work to be done around matrixing, which involves mining and organising data to truly understand high-risk situations. This will help improve how decisions are made to protect children at pace within a multi-agency environment. He points out that a common thread in every serious child safeguarding practice review is the critical issue of lack of information sharing. “To avert further harm, we must equip multi-agency safeguarding teams with the technological tools needed to improve the initial information leading to a child’s referral. This will support decision-makers in navigating the challenging risk-based decisions that are essential to protect children.”

According to Hart and Critchley, tech companies and law enforcement must unite to combat CSA by reducing the misuse of Gen AI and other emerging technologies. They urge profitable tech giants to enhance child safety and criticise end-to-end encryption (E2EE) for hindering police efforts to protect children.

“Policing will not step away from its duty to pursue and work with partners. While education and parental vigilance are crucial, tech companies bear significant responsibility. They have neglected their moral duty, resulting in child harm and abuse. Existing technology can enhance age verification and block harmful image uploads – tools that could prevent coercion by predators. It’s alarming that companies may be blind to abuse on their platforms. The Online Safety Act¹⁵ should not have been necessary to hold them accountable,” said Critchley.

The NCA has been spearheading efforts with companies to reconsider the implementation of E2EE on behalf of national law enforcement.

Hart said, “Our duty as law enforcement is to safeguard the public from organised and serious crime. To achieve this, we require access to critical information. We urge platforms to design security systems that can detect illegal activities and report message content to law enforcement.”

Hart points out that the successful prosecution of David Wilson, one of the most prolific sex offenders that the NCA has ever investigated, was possible because law enforcement was able to access evidence contained within his messages.

By posing as teenage girls online, Wilson was able to persuade 51 boys to send indecent images of themselves and, in some cases, blackmailed victims into abusing their friends and siblings.¹⁶ Hart said, “In an E2EE environment, it is unlikely this case would have been detected.”

Critchley and Hart are working closely with government and online safety regulator Ofcom to ensure platforms have adequate safety measures and to close any loopholes in legislation, particularly those allowing the distribution of manuals on creating AI CSAM. “It’s vital that we improve online protections and hardwire safety by design into new industry products to ensure our collective ability to tackle the threat keeps pace with technology,” Hart states.

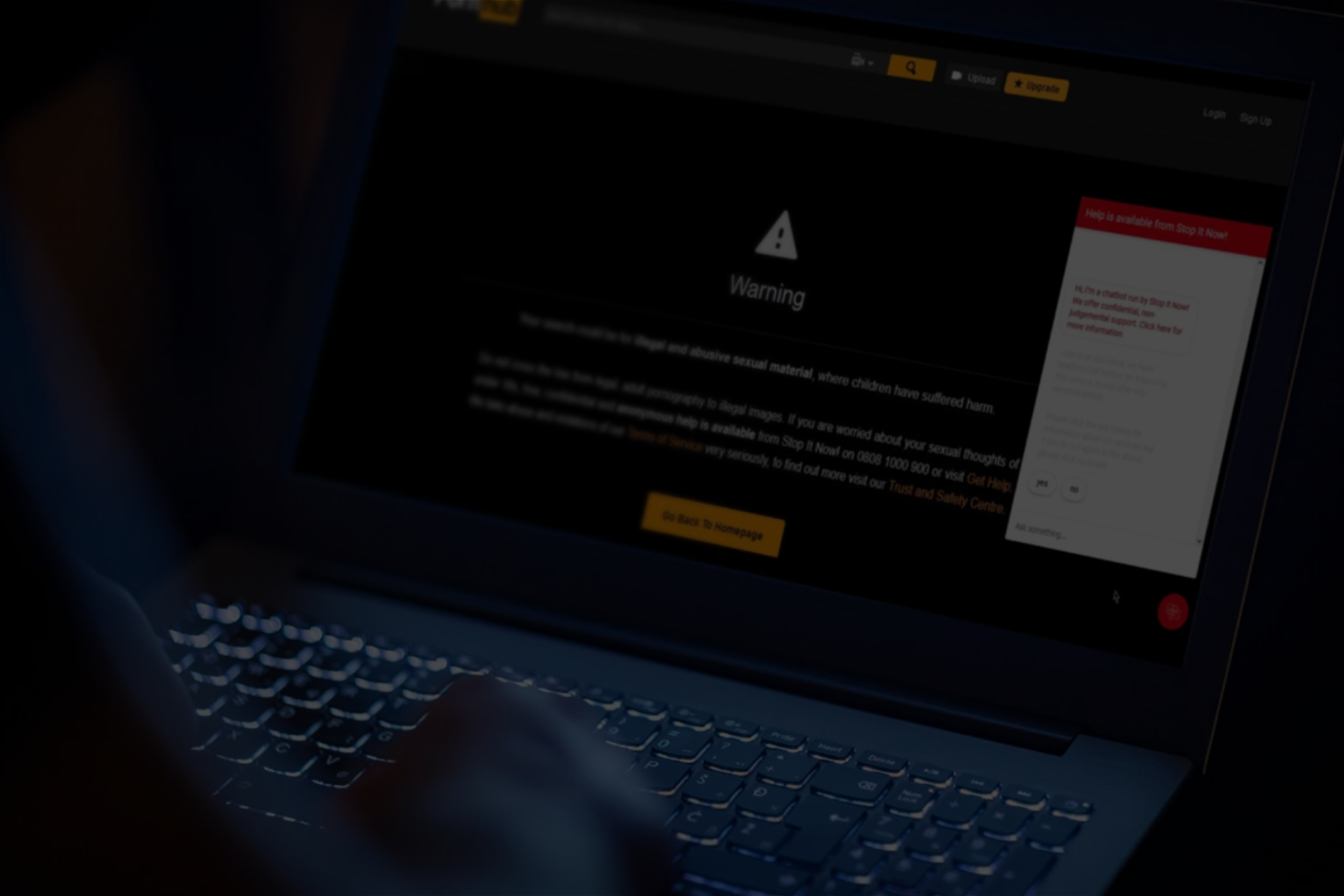

Yet some online platforms are pioneering new solutions to keep children safe. A notable example is the reThink chatbot on Pornhub UK, created by The Lucy Faithfull Foundation (LFF) and IWF. The interactive chatbot engages adult pornography users attempting to search for CSAM on Pornhub, offering support services called Stop It Now, provided by LFF. Developed using behavioural research on interventions, the chatbot’s messages were designed to divert users away from CSAM. According to the literature review which assisted in the development of the chatbot, effective warning messages need to attract attention, be easy to understand, be believable, come from a credible source, and impart explicit information about how to avoid harm.¹⁷ The more salient the message is, the more effective it is.

There was a statistically significant decrease in CSAM searches on Pornhub UK during this period. Most sessions which triggered the warning and chatbot once do not appear to have searched for CSAM again, and those who saw the warning message more than once tended to switch to non-CSAM searches after receiving the warning.¹⁸

“This initiative demonstrates the impact that tech can have in protecting the young and vulnerable by challenging those who may put them at risk. More than that, it can help us better understand the pathways into offending by those who start searching for images of children,” said Hart.

The Safety Tech Challenge,¹⁹ spearheaded by Government, is driving innovation in the safety tech sector, but Hart would like to see the AI industry invest in coming up with the next generation of tools that can fight child abuse. These tools must be able to proactively block CSA and detect new unknown content across various media, including voice and video, to prevent more victims. Hart remarks, “Once abuse content is out there, it’s very hard to remove it completely, so it’s critical to stop content from spreading in the first place.”

Hart also notes, “While tech is part of the problem, it is also part of the solution. Behind the scenes, we have incredible people working to tackle child sexual abuse and the job exposes them to content that is truly harrowing. By investing in brilliant technology, we can support our brilliant teams in preventing more abuse from happening and protect children.”